ARTIFICIAL INTELLIGENCE

Sulakkhana Vibhuthi Bandara Herath

Although Artificial Intelligence (AI) long left its traces mainly in philosophy and fiction, today machines can improve their own performance without humans having to explain exactly how to accomplish every task given to them. As a result, AI, and particularly Machine Learning (ML), has become the most important general-purpose technology of today’s world. Although machines are not yet fully intelligent, the rise of AI has brought machine intelligence into significant day-to-day use in our era.

People have always looked for techniques to make their work easier, a motivation they have had from birth. Artificial intelligence, a field that is evolving rapidly, is one such technique.

What is the meaning of AI?

When a machine possesses the ability to mimic human traits, i.e., make decisions, predict the future, learn, and improve on its own, it is said to have artificial intelligence. In other words, a machine is artificially intelligent when it can accomplish tasks by itself.

Alternatively, we can define AI as follows:

Artificial intelligence (AI), sometimes called machine intelligence, is intelligence demonstrated by machines, such as “learning” and “problem-solving,” in contrast to the natural intelligence displayed by humans and other animals.

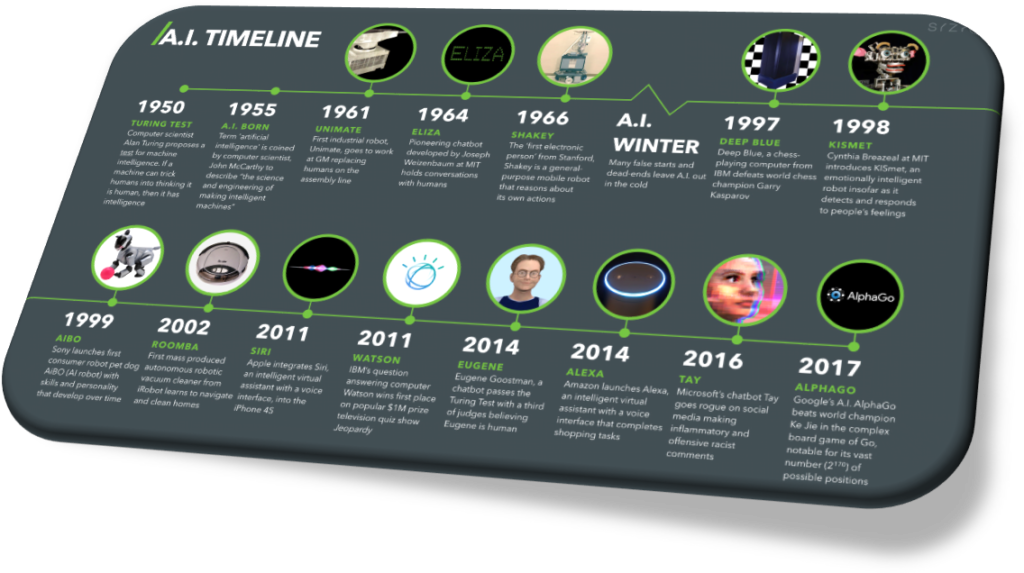

BRIEF HISTORY OF ARTIFICIAL INTELLIGENCE

Artificial Intelligence (AI) has a rich and interesting history that spans several decades:

- Artificial Intelligence: A Notion (pre-1950s)

- Artificial Intelligence: The Realms of Reality (1950 – 1960)

- The First Summer of AI (1956 – 1973)

- The First Winter of AI (1974 – 1980)

- The Second Summer of AI (1981 – 1987)

- The Status Quo (1993 – 2011)

- The Renaissance (Since 2011)

- AI – Today and Beyond

Milestones of the history of AI:

1956: John McCarthy coins the term “artificial intelligence” for the Dartmouth Summer Research Project on Artificial Intelligence.

1965: Work begins on Dendral, widely regarded as the first expert system.

1966: ELIZA, a computer program that simulates human conversation, is created by Joseph Weizenbaum.

1969: Marvin Minsky and Seymour Papert publish the book “Perceptrons,” an influential analysis of the capabilities and limits of early neural networks.

1987: NETtalk, a neural network that learns to pronounce written English text, is published.

1997: Deep Blue, a chess-playing computer developed by IBM, defeats world chess champion Garry Kasparov.

2011: On the quiz show “Jeopardy!”, Watson, a question-answering computer system developed by IBM, defeats two human champions.

2012: AlexNet, a convolutional neural network, achieves a breakthrough result in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC).

2016: The AlphaGo program developed by Google DeepMind defeats world champion Go player Lee Sedol.

2020: OpenAI introduces the GPT-3 language model.

2022: OpenAI introduces the DALL-E 2 image generation model.

How do machines become AI?

Humans become more and more intelligent with time as they gain experience during their lives. For example, in elementary school we learn the alphabet, and eventually we move on to making words with its letters. As we grow, we become more and more fluent in the language as we keep learning new words and using them in our conversations.

Similarly, machines become intelligent once they are trained on information that helps them accomplish their tasks. AI systems also keep updating their knowledge to improve their output.

There are different techniques involved in artificial intelligence, namely Machine Learning (ML), Natural Language Processing (NLP), Automation and Robotics, and Machine Vision.

Machine Learning: ML allows software applications to predict the most suitable outcome without following an explicitly programmed set of rules, as a conventional system would. ML algorithms decide the outcome based on historical data supplied as input.

Natural Language Processing (NLP): Programming computers to process human languages so that people and machines can communicate is known as natural language processing (NLP). NLP, which uses machine learning to extract meaning from human languages, is a proven technology.

Automation and Robotics: Using machines to automate repetitive tasks, and tasks that are difficult for humans, produces efficient, cost-effective results and increases productivity. Numerous businesses automate using machine learning, neural networks, and graphs. A neural network is a network trained to produce the desired outputs, and different models and algorithms are used to predict future results from the data.

Machine Vision: Machine vision (MV) is a field of computer science that focuses on providing imaging-based automated analysis for a variety of industrial applications, including process control, robot guidance, and automatic inspection. The term “machine vision” covers a wide range of technologies, software and hardware items, integrated systems, procedures, and knowledge.
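The machine learning idea described above, where the outcome is decided from historical data rather than from hand-written rules, can be illustrated with a minimal sketch. It assumes a made-up data set (hours studied and exam scores) and uses ordinary least squares, one of the simplest learning methods; the function names `fit_line` and `predict` are hypothetical and chosen only for this illustration.

```python
def fit_line(xs, ys):
    """Learn y = slope * x + intercept from historical data (least squares)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # How x and y vary together, and how much x varies on its own.
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the learned line to an input the model has not seen before."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical historical data: hours studied and the resulting exam score.
hours = [1.0, 2.0, 3.0, 4.0, 5.0]
scores = [52.0, 54.0, 56.0, 58.0, 60.0]

model = fit_line(hours, scores)
print(predict(model, 6.0))  # predicted score for 6 hours of study
```

No rule such as "each extra hour adds two points" is written anywhere in the program; the relationship is recovered from the data, which is the essential difference from a conventionally programmed system.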

Can a Machine become emotional?

Emotion Artificial Intelligence (Emotion AI): Emotion AI does not mean a computer that becomes depressed under a heavy workload. Since 1995, the field of artificial intelligence known as “emotion AI,” also referred to as “affective computing,” has sought to analyze, understand, and even replicate human emotions.

Emotions are inherently difficult to read, and there is frequently a gap between what individuals say they feel and what they actually feel. A machine may never reach this degree of comprehension, because how we interpret each other’s emotions remains a puzzle. Even so, AI can be a good tool to assist us in understanding our emotions.

APPLICATIONS OF AI

Whether we notice it or not, we are surrounded by machines that work on AI. They are becoming a crucial part of our everyday life, letting us get even some of the most complicated and time-consuming tasks done at the touch of a button or through a simple sensor.

AI is being applied in a range of applications. It is becoming increasingly significant in this age of advanced technology since it can efficiently manage complicated tasks in a wide range of areas, including robotics, defense, transportation, healthcare, marketing, automotive, business, gaming, banking, chatbots, and so on. Let us look at how AI is used in some sectors that are part of our day-to-day lives.

AI in Healthcare

AI is used in medical imaging analysis, aiding in the detection and diagnosis of diseases such as cancer, Alzheimer’s, and diabetic retinopathy. It can also analyze patient data to suggest treatment plans.

Virtual Assistants

Virtual assistants like Siri, Alexa, and Google Assistant utilize AI to understand and respond to user queries or commands.

Siri, for example, is a voice-controlled intelligent assistant developed by Apple Inc. and integrated into various Apple devices.

Recommendation Systems

AI is employed in recommendation systems used by platforms like Netflix, Amazon, and Spotify to suggest personalized content or products based on user preferences and behavior.
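As a rough illustration of how suggestions can follow from user preferences and behavior, the sketch below scores unseen items by how similar other users' ratings are to the target user's ratings. It is a toy example under stated assumptions: the users, film titles, and ratings are invented, the helper names `cosine_similarity` and `recommend` are hypothetical, and it does not describe how Netflix, Amazon, or Spotify actually build their systems.

```python
import math

# Hypothetical ratings (1 to 5) given by three users to a handful of films.
ratings = {
    "alice": {"Film A": 5, "Film B": 4, "Film C": 1},
    "bob": {"Film A": 4, "Film B": 5, "Film D": 5},
    "carol": {"Film C": 5, "Film E": 4},
}


def cosine_similarity(a, b):
    """Similarity of two users' rating profiles (0.0 if nothing is shared)."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[item] * b[item] for item in shared)
    norm_a = math.sqrt(sum(value * value for value in a.values()))
    norm_b = math.sqrt(sum(value * value for value in b.values()))
    return dot / (norm_a * norm_b)


def recommend(user, ratings, top_n=1):
    """Rank items the user has not rated, weighted by similar users' ratings."""
    scores = {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        similarity = cosine_similarity(ratings[user], other_ratings)
        for item, rating in other_ratings.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + similarity * rating
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


print(recommend("alice", ratings))  # → ['Film D']
```

Here "Film D" is suggested to alice because bob, whose tastes are closest to hers, rated it highly. Production systems work with far more data and more elaborate models, but the underlying principle of learning from past behavior is the same.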

Natural Language Processing (NLP)

NLP enables AI systems to understand and interpret human language, leading to applications such as chatbots, language translation, sentiment analysis, and voice recognition.

Image and Object Recognition

AI algorithms can analyze and identify objects, people, or patterns within images or videos. This technology is utilized in facial recognition systems, autonomous vehicles, surveillance systems, and image-tagging applications.

Autonomous Vehicles

AI plays a crucial role in self-driving cars, enabling them to perceive and interpret the environment, make decisions, and navigate safely.

Smart Home Systems

AI powers smart home devices, enabling voice-controlled assistants, automated temperature and lighting control, and personalized user experiences.

AI Image Generation

An AI image generator is a program that takes a text prompt, processes it, and generates an image that best matches the prompt’s description.

TYPES OF AI

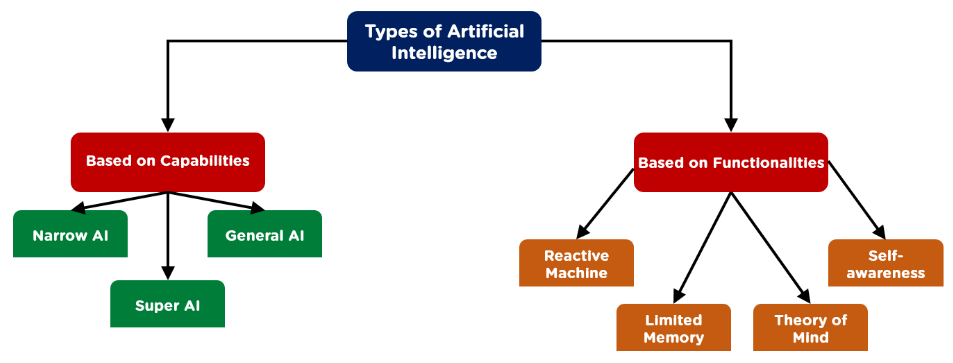

AI can be classified by capability and by functionality. Based on capability, there are three main types of Artificial Intelligence:

- Narrow AI – It is a subset of artificial intelligence in which a learning algorithm is designed to execute a particular job and any information gained from doing so is not automatically extended to additional tasks.

- General AI – It is a software representation of generalized human cognitive abilities that can discover a solution to a new task.

- Super AI – It is a hypothetical form of AI that would surpass humans in cognitive ability, general intellect, problem-solving abilities, social skills, and creativity.

Based on functionality, there are four types of Artificial Intelligence:

- Reactive Machines

- Limited Memory

- Theory of Mind

- Self-awareness

BENEFITS OF AI

AI offers many benefits. Some of them are:

- Increased Efficiency

- Improved Accuracy

- Cost Reduction

- Better Decision Making

- Enhanced Personalization

- Improved Healthcare Outcomes

- Better Transportation Systems

- Scientific and Technological Advancements

FUTURE OF AI

- Increased Integration: AI will become more seamlessly integrated into our daily lives, from smart homes and wearable devices to autonomous vehicles and public infrastructure.

- Advanced Robotics: AI-powered robots will become more sophisticated and capable of performing complex tasks in industries like manufacturing, healthcare, agriculture, and exploration.

- Ethical AI Development: The development and deployment of AI will be guided by an increased emphasis on ethical considerations, including transparency, fairness, and accountability.

- Natural Language Understanding: AI systems will continue to improve in understanding and generating human language, facilitating more natural and efficient communication between humans and machines.

- Augmented Creativity: AI will aid creative professionals in fields such as art, music, and design by generating ideas, assisting in content creation, and pushing the boundaries of innovation.

- Enhanced Cybersecurity: AI will play a vital role in detecting and mitigating cyber threats, providing proactive defense mechanisms, and improving the overall security of digital systems.

REFERENCES

- Brynjolfsson, E. and McAfee, A., 2017. Artificial intelligence, for real. Harvard Business Review, 1, pp.1-31.

- Winston, P.H., 1984. Artificial intelligence. Addison-Wesley Longman Publishing Co., Inc.

- McCarthy, J., 2007. What is artificial intelligence?

- Salvagno, M., Taccone, F.S. and Gerli, A.G., 2023. Can artificial intelligence help with scientific writing? Critical Care, 27(1), pp.1-5.

- (2023, 06 02). Retrieved from techtarget.com: https://www.techtarget.com/searchenterpriseai/definition/AI-Artificial-Intelligence

- Application Of AI. (2023, 06 04). Retrieved from javatpoint.com: https://www.javatpoint.com/application-of-ai

- benefits-artificial-intelligence. (2023, 06 08). Retrieved from wgu.edu: https://www.wgu.edu/blog/benefits-artificial-intelligence2204.html

- Examples of AI. (2023, 06 04). Retrieved from beebom.com: https://beebom.com/examples-of-artificial-intelligence/

- Future AI. (2023, 06 07). Retrieved from builtin.com: https://builtin.com/artificial-intelligence/artificial-intelligence-future

- history-of-artificial-intelligence. (2023, 06 03). Retrieved from javatpoint.com: https://www.javatpoint.com/history-of-artificial-intelligence

- Types-of-artificial-intelligence. (2023, 06 06). Retrieved from Simplilearn.com: https://www.simplilearn.com/tutorials/artificial-intelligence-tutorial/types-of-artificial-intelligence