In March 2019, the CEO of a UK-based energy firm received a phone call from Germany that he believed was from his boss, asking him to immediately transfer €220,000 to the bank account of a Hungarian supplier (Stupp 2019). The CEO followed the order and transferred the money. On the same day, he received another call impersonating his boss, but this time the call came from Austria. This made the CEO suspicious, so he informed the police and realized that he had been scammed. The scammer had successfully impersonated the boss’s voice using Artificial Intelligence (AI) techniques and convinced the CEO to transfer the money. During the phone conversation, the CEO even recognized his boss’s slight German accent. Imagine that, instead of a voice call, the CEO had received an impersonated video call showing all of his boss’s facial expressions: it would probably have left him with no doubt at all. This is just one example that shows how a DeepFake can fool humans and how serious the implications can be.

The term “DeepFake” first emerged in 2017, coined by a Reddit user who created pornographic videos. This user ran AI algorithms on his home computer to replace the faces of performers in pornographic videos with the faces of celebrities, making it appear as if the celebrities were performing in those videos, and uploaded the results. DeepFakes, as the name implies, are fake but highly realistic AI-synthesized content such as images, videos, texts, or any other forgeries. Figure 1 shows some examples of fake face images generated by AI.

Figure 1: Examples of photorealistic fake faces generated by AI. These images do not show real human faces; they were generated by AI (image from Karras 2018).

How are DeepFakes generated?

Most DeepFakes are created by Generative Adversarial Networks (GANs) (Kelly 2021), a Deep Learning (DL) technique originally proposed by Ian Goodfellow and his team in 2014 (Goodfellow 2014). DL is a subfield of AI based on deep (multi-layer) Artificial Neural Networks, which are loosely inspired by the way the human brain works. A GAN contains two parts: a generator and a discriminator. The generator generates DeepFakes, while the discriminator tries to tell the fakes (i.e., those produced by the generator) from the real samples. The two are trained together in an adversarial manner, each improving in response to the other.
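For readers who want the formal statement, this adversarial training is usually written as the minimax objective given in Goodfellow et al. (2014). The symbols are those of that paper rather than of this article: $D(x)$ is the discriminator’s estimated probability that a sample $x$ is real, $G(z)$ is the sample the generator produces from random noise $z$, $p_{\text{data}}$ is the distribution of real data, and $p_{z}$ is the noise distribution.

```latex
\min_{G}\,\max_{D}\; V(D, G)
  = \mathbb{E}_{x \sim p_{\text{data}}(x)}\bigl[\log D(x)\bigr]
  + \mathbb{E}_{z \sim p_{z}(z)}\bigl[\log\bigl(1 - D(G(z))\bigr)\bigr]
```

The discriminator tries to make $V$ large by scoring real samples close to 1 and generated samples close to 0, while the generator tries to make $V$ small by producing samples that the discriminator scores as real.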

To see how this works, let’s assume that I (the generator) am trying to forge your signature. In the beginning, the signature I produce may look very different from yours, so you (the discriminator) can easily identify it as a fake. However, as my main aim is to fool you, I will improve my skills over time. At the same time, you will also get better at telling fake signatures from real ones, because I keep showing you the signatures I produce. Eventually, though, I will become an expert at producing your signature, and at that point you will no longer be able to tell whether a signature given to you was produced by me or signed by you. This is the basic idea behind GANs.
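The signature game can be made a little more concrete with numbers. The sketch below is only an illustration, not a full GAN: it assumes the discriminator outputs a probability that a sample is real, and it scores both players with the binary cross-entropy losses commonly used to train GANs (the generator loss shown is the widely used "non-saturating" variant). The function names and the example probabilities are made up for illustration.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator.

    d_real: probabilities of 'real' assigned to genuine samples.
    d_fake: probabilities of 'real' assigned to generated samples.
    The loss is low when genuine samples score near 1 and fakes near 0.
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: low when fakes are scored as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Hypothetical scores early in training: forgeries are easy to spot.
early_real, early_fake = [0.95, 0.90], [0.05, 0.10]
# Hypothetical scores late in training: the discriminator can only guess.
late_real, late_fake = [0.5, 0.5], [0.5, 0.5]

print("early:", discriminator_loss(early_real, early_fake), generator_loss(early_fake))
print("late: ", discriminator_loss(late_real, late_fake), generator_loss(late_fake))
```

In the early round the discriminator’s loss is low and the generator’s loss is high. Once the discriminator outputs 0.5 for everything, it is reduced to guessing, which corresponds to the point at which a forged signature can no longer be told apart from a genuine one.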

Although generating forgeries with the aid of computers is not new, the forgeries generated by GANs are more realistic and convincing than those produced by earlier techniques.

Benefits of DeepFakes

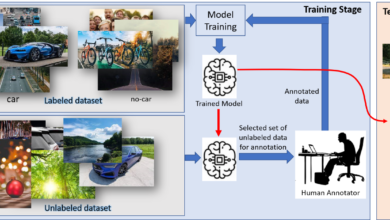

GANs have a wide range of applications due to their ability to generate realistic DeepFakes. These applications include data augmentation for training DL models, image-to-image translation, image inpainting, image super-resolution, and text-to-image synthesis.

DL algorithms are usually data-hungry: they require a large amount of data for training. Collecting that much data is difficult, time-consuming, and expensive, particularly in the field of medical image analysis. With GANs, however, new data can be generated and added to the existing data to train DL models, and models trained in this way often produce better results than models trained using only the original training data.
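As a rough sketch of this idea, the snippet below mixes a small set of real samples with synthetic ones before training. Everything here is an assumption made for illustration: `toy_generator` is a stand-in that returns random numbers rather than a trained GAN generator, and `augment` is a hypothetical helper, not part of any library.

```python
import random


def toy_generator(rng):
    """Hypothetical stand-in for a trained GAN generator.

    A real generator would map random noise to a realistic sample;
    this one simply returns four random numbers.
    """
    return [rng.gauss(0.0, 1.0) for _ in range(4)]


def augment(real_samples, generator, n_synthetic, seed=0):
    """Return the real samples plus n_synthetic generated ones, shuffled.

    Each item is tagged with its origin so the two sources can be
    told apart later (e.g. to evaluate on real data only).
    """
    rng = random.Random(seed)
    synthetic = [generator(rng) for _ in range(n_synthetic)]
    combined = [(x, "real") for x in real_samples]
    combined += [(x, "synthetic") for x in synthetic]
    rng.shuffle(combined)
    return combined


real = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.6, 0.7, 0.8]]
training_set = augment(real, toy_generator, n_synthetic=3)
print(len(training_set))  # 5 samples: 2 real + 3 synthetic
```

Keeping the origin tag is a deliberate choice in this sketch: it lets the enlarged set be used for training while the final evaluation is still done on real data only.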

The purpose of image-to-image translation is to change the style of an image while leaving its content unchanged. Examples include generating colored images from their black-and-white counterparts, turning photographs into cartoons, generating photographs from paintings, converting day-time images to night-time images and vice versa, and changing the emotion shown in a face image (e.g., from a happy face to a sad face). With image inpainting, we can restore a damaged photograph with missing pixels into a complete, realistic-looking image. Usually, when we upscale low-resolution images, their details become blurred. To overcome this, GANs provide ways to obtain high-resolution images from their low-resolution versions. GANs can also be used to generate images from text descriptions (e.g., “a white bird with brown wings”).

Possible threats from DeepFakes

Although DeepFakes offer a wide range of benefits, they also pose serious threats. Because source code and software are freely available online, almost anyone with some technical knowledge can generate DeepFakes and misuse them in different ways. These range from forgeries such as signatures and handwritten documents to humorous, pornographic, or political videos of a person saying or doing something that he or she never said or did. The following are only a few of the possible threats.

- Generation of fake evidence: Signatures and handwritten documents are some of the forms of evidence people use in their day-to-day lives and in court. Such evidence can be generated by DeepFake techniques and misused as if it were real.

- Fake news generation: Fake news, in the form of text or audio-visual content, can spread quickly through social media and can easily reach and influence millions of users. A convincing video of a politician or a military officer committing a crime or making racist comments is likely to lead to harmful consequences in society. Such videos can be generated by a range of parties for different purposes: terrorist organizations seeking to cause unrest in society, politicians or foreign intelligence agencies seeking to spread propaganda, disrupt election campaigns, or skew voters’ opinions, or even individuals doing it for entertainment.

- Denial of genuine content: Because of DeepFakes, the authenticity of any audio-visual footage can now be called into question. A criminal can claim that genuine evidence of a crime is a DeepFake, and officials or politicians engaged in corruption can dismiss video footage of them taking bribes as a DeepFake. This will reduce the trust people have in law enforcement officers and in the law of the country.

- Stock market manipulation: DeepFake videos can also be created to manipulate the stock market. For example, news reports said that Coca-Cola’s market value dropped by around $4 billion after Cristiano Ronaldo gestured at a Euro 2020 press conference that people should drink water instead of Coca-Cola (Gilbert 2021). If a genuine clip can be linked to such an effect, DeepFakes could be created to promote or demote products in a similar way.

- Pornography: This includes the creation of celebrity porn and revenge porn, in which the faces of the actors in pornographic videos are swapped with the faces of targeted individuals without their consent. Such videos can also be used for blackmail.

How to identify DeepFakes?

Although most DeepFakes are currently identified by human experts, some tools have already been proposed for identifying them. For example, a tool developed at the University at Buffalo is reported to detect DeepFakes with an accuracy of 94% by analysing the eyes and the light reflections in them (Khanna 2021). However, as the technology progresses, identifying DeepFakes is going to become more and more challenging in the near future.

Let’s consider the signature example again. Suppose you tell me that I am doing a good job of mimicking your signature, but that one particular letter needs some improvement. I will then focus on that letter and improve the way I write it. In the same way, whenever a weakness is identified in the creation of DeepFakes, remedies are found to overcome it. Early DeepFake videos were easy to spot because the eyes of the face-swapped people in them did not blink. That weakness has since been addressed, and nowadays we have DeepFakes with naturally blinking eyes. So, as the technology progresses, it is going to become difficult to spot DeepFakes, both for humans and even for some specialized tools.
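To make the blinking example concrete, the following is a toy sketch of what such a heuristic could look like. It is not the method used by the University at Buffalo tool or by any real detector. It assumes that some earlier step has already produced a per-frame "eye openness" score between 0 (closed) and 1 (open); that score, the threshold, and the function names are all hypothetical.

```python
def count_blinks(eye_openness, closed_threshold=0.2):
    """Count blinks in a sequence of per-frame eye-openness scores.

    A blink is counted each time the score drops below the threshold
    after having been at or above it.
    """
    blinks = 0
    eyes_closed = False
    for score in eye_openness:
        if score < closed_threshold and not eyes_closed:
            blinks += 1
            eyes_closed = True
        elif score >= closed_threshold:
            eyes_closed = False
    return blinks


def looks_suspicious(eye_openness, min_blinks=1):
    """Flag a clip whose subject blinks fewer than min_blinks times."""
    return count_blinks(eye_openness) < min_blinks


# Hypothetical scores for a natural clip and for an early-style DeepFake.
natural = [0.9, 0.8, 0.1, 0.05, 0.7, 0.9, 0.1, 0.8]
unblinking = [0.9, 0.85, 0.9, 0.88, 0.9, 0.87, 0.9, 0.9]

print(count_blinks(natural), looks_suspicious(natural))        # 2 False
print(count_blinks(unblinking), looks_suspicious(unblinking))  # 0 True
```

A rule this simple also shows why such checks do not last: once generated faces blink naturally, the clip passes the test, and detectors have to look for a different weakness.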

References

Gilbert A. C., 2021 https://finance.yahoo.com/news/coca-cola-shares-drop-4-122329719.html (Accessed 08 July 2021)

Goodfellow I. J., Pouget-Abadie J., Mirza M., Xu B., Warde-Farley D., Ozair S., Courville A. C., & Bengio Y., 2014 Generative Adversarial Networks, International Conference on Neural Information Processing Systems.

Karras T., Aila T., Laine S., & Lehtinen J., 2018 Progressive Growing of GANs for Improved Quality, Stability, and Variation, International Conference on Learning Representations.

Kelly M. S., & Laurie A. H., 2021 Deep Fakes and National Security, Congressional Research Service Report

Khanna M., 2021 AI Tool Can Detect Deepfakes With 94% Accuracy By Scanning The Eyes, https://www.indiatimes.com/technology/news/deepfakes-ai-detection-tool-scan-eyes-suny-buffalo-536392.html (Accessed 11 November 2021)

Stupp C., 2019 Fraudsters Used AI to Mimic CEO’s Voice in Unusual Cybercrime Case, https://www.wsj.com/articles/fraudsters-use-ai-to-mimic-ceos-voice-in-unusual-cybercrime-case-11567157402 (Accessed 08 July 2021)