Enhancing the Unseen: How Super Resolution Brings Hidden Details to Light in Scientific Imaging

Senior Lecturer Dr. Uthayasanker Thayasivam & Senior Lecturer H.K.G.V.L. Wickramarathna, Department of Computer Science and Engineering, University of Moratuwa

Summary

Microscopic imaging often faces resolution limitations, hindering detailed structural analysis crucial for scientific fields like biology and materials science. While techniques such as SRGAN, ESRGAN, and SwinIR improve resolution, they often fail to preserve structural integrity, impacting tasks like identifying cellular structures and examining material defects. Our research aims to develop a super-resolution technique that maintains structural details, ensuring high resolution and accurate representation.

Keywords:

Super-resolution, Structural integrity, Conditional GANs, SSIM, PSNR

Content

Microscopic imaging often faces resolution limitations, hindering detailed structural analysis crucial for scientific fields like biology and materials science. While techniques such as SRGAN[1], ESRGAN[2], and SwinIR[3] improve resolution, they often fail to preserve structural integrity[1], impacting tasks like identifying cellular structures and examining material defects. Our research aims to develop a super-resolution technique that maintains structural details, ensuring high resolution and accurate representation.

The proposed solution is to integrate structural information into super-resolution techniques. This is achieved by using conditional generative adversarial networks (Conditional GANs) and optimizing them with a structure-informed convex loss function. The aim is for the super-resolved images to preserve the structural integrity of the original images.

SRGAN

SRGAN is a generative adversarial network designed for single-image super-resolution. It can infer photo-realistic natural images at a 4× upscaling factor [1].

Why SRGAN?

Earlier approaches based on CNNs and interpolation methods (bicubic, bilinear) had difficulty capturing high-frequency details and textures [1].

SRGAN Architecture

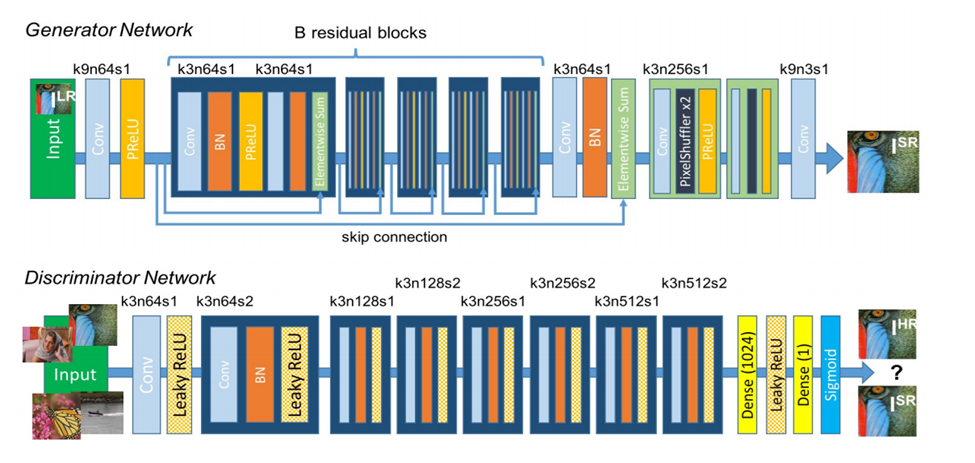

The Super-Resolution Generative Adversarial Network (SRGAN) is a deep learning model designed to enhance the resolution of images. It leverages the power of Generative Adversarial Networks (GANs) to produce high-quality, high-resolution images from low-resolution inputs. The SRGAN architecture consists of two main components: the generator and the discriminator.

Generator:

The generator in SRGAN is responsible for creating high-resolution images from low-resolution inputs. Its architecture is composed of several layers:

Residual Blocks: The generator uses a series of residual blocks, which consist of convolutional layers, batch normalization, and Parametric ReLU (PReLU) activation functions. These blocks help in preserving the image’s spatial information and learning complex features.

Up-sampling Layers: After the residual blocks, the generator employs up-sampling layers (typically using sub-pixel convolution layers) to increase the resolution of the image. These layers help in scaling the low-resolution image to a higher resolution.

Final Convolution Layer: A final convolution layer with a Tanh activation function generates the output high-resolution image.
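The sub-pixel convolution step used for up-sampling can be illustrated with a minimal NumPy sketch. It shows only the rearrangement ("pixel shuffle") that turns extra channels into extra spatial resolution; the convolution that produces those channels is omitted. The function name `pixel_shuffle` and the toy array sizes are assumptions made for illustration, not code from the SRGAN authors.

```python
import numpy as np

def pixel_shuffle(x: np.ndarray, r: int) -> np.ndarray:
    """Rearrange a (C*r*r, H, W) feature map into a (C, H*r, W*r) image."""
    c_in, h, w = x.shape
    if c_in % (r * r) != 0:
        raise ValueError("channel count must be divisible by r*r")
    c = c_in // (r * r)
    x = x.reshape(c, r, r, h, w)       # split channels into (C, r, r)
    x = x.transpose(0, 3, 1, 4, 2)     # reorder axes to (C, H, r, W, r)
    return x.reshape(c, h * r, w * r)  # interleave the r x r sub-pixels

# Toy input: 4 channels of size 2x2 become 1 channel of size 4x4 (r = 2).
features = np.arange(4 * 2 * 2, dtype=np.float32).reshape(4, 2, 2)
upscaled = pixel_shuffle(features, r=2)
print(upscaled.shape)  # (1, 4, 4)
```

Each 2×2 block of the output is filled from the four input channels at the same spatial location, which is why the layer needs r² times as many channels as the output image.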

Discriminator:

The discriminator in SRGAN is designed to distinguish between real high-resolution images and the ones generated by the generator. Its architecture includes:

Convolutional Layers: A series of convolutional layers with LeakyReLU activations are used to extract features and down-sample the input image.

Dense Layers: After the convolutional layers, the discriminator has dense layers that further process the features and lead to a final binary classification (real or fake).
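As a rough illustration of the dense part of the discriminator, the sketch below applies a dense layer with LeakyReLU followed by a dense layer with a sigmoid, giving a probability that each input is a real high-resolution image. The layer sizes, the random weights, and the slope `alpha=0.2` are assumptions chosen for the example, and the convolutional feature extractor is replaced by random feature vectors.

```python
import numpy as np

def leaky_relu(x, alpha=0.2):
    """LeakyReLU: pass positives through, scale negatives by alpha."""
    return np.where(x > 0, x, alpha * x)

def sigmoid(x):
    """Map a real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))

def discriminator_head(features, w1, b1, w2, b2):
    """Dense -> LeakyReLU -> Dense -> sigmoid (probability of 'real')."""
    hidden = leaky_relu(features @ w1 + b1)
    logit = hidden @ w2 + b2
    return sigmoid(logit)

# Toy stand-ins: 3 images, each already reduced to 8 flattened features.
rng = np.random.default_rng(0)
features = rng.normal(size=(3, 8))
w1, b1 = rng.normal(size=(8, 4)), np.zeros(4)
w2, b2 = rng.normal(size=(4, 1)), np.zeros(1)

probs = discriminator_head(features, w1, b1, w2, b2)
print(probs.shape)  # (3, 1): one real/fake probability per image
```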

Figure: Architecture of the generator and discriminator networks, with the corresponding kernel size (k), number of feature maps (n), and stride (s) indicated for each convolutional layer.

Perceptual loss function in SRGAN [1]

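- Adversarial Loss: A discriminator network is trained to distinguish between super-resolved images and original high-resolution images, which pushes the generator toward outputs that resemble natural images.

- Content Loss: Motivated by perceptual similarity rather than pixel-wise similarity, it compares the super-resolved and original images in a feature space so that the output keeps perceptually relevant content and textures.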
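The two terms can be combined as a weighted sum. The sketch below is a simplified illustration, not the authors' implementation: the feature arrays stand in for feature maps from a pretrained network (VGG in [1]), `d_sr` stands in for discriminator outputs on super-resolved images, and the weight `adv_weight=1e-3` follows the weighting reported in [1]. Averages are used instead of sums for simplicity.

```python
import numpy as np

def content_loss(feat_sr, feat_hr):
    """Mean squared difference between feature maps of SR and HR images."""
    feat_sr = np.asarray(feat_sr, dtype=np.float64)
    feat_hr = np.asarray(feat_hr, dtype=np.float64)
    return float(np.mean((feat_sr - feat_hr) ** 2))

def adversarial_loss(d_sr, eps=1e-12):
    """Generator-side loss: -log D(G(x)), averaged over the batch.

    d_sr holds the discriminator's probabilities that SR images are real.
    """
    d_sr = np.asarray(d_sr, dtype=np.float64)
    return float(np.mean(-np.log(d_sr + eps)))

def perceptual_loss(feat_sr, feat_hr, d_sr, adv_weight=1e-3):
    """Content loss plus a small weighted adversarial term."""
    return content_loss(feat_sr, feat_hr) + adv_weight * adversarial_loss(d_sr)

# Hypothetical placeholder values, for illustration only.
feat_sr = np.ones((2, 4, 4))
feat_hr = np.zeros((2, 4, 4))
d_sr = np.array([0.5, 0.5])
loss = perceptual_loss(feat_sr, feat_hr, d_sr)
```

The small adversarial weight means the content term dominates numerically, while the adversarial term nudges the generator toward sharper, more natural-looking textures.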

Measures to Evaluate Structural Preservation

- SSIM (Structural Similarity Index)

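Measures the perceptual difference between two similar images. It considers changes in structural information, luminance, and contrast. For two images A and B [4],

$$\mathrm{SSIM}(A, B) = \frac{(2\mu_A \mu_B + C_1)(2\sigma_{AB} + C_2)}{(\mu_A^2 + \mu_B^2 + C_1)(\sigma_A^2 + \sigma_B^2 + C_2)}$$

where $\mu_A$, $\mu_B$ are the means, $\sigma_A^2$, $\sigma_B^2$ the variances, and $\sigma_{AB}$ the covariance of A and B, and $C_1$, $C_2$ are constants that stabilize the division.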

SSIM is highly effective in assessing the preservation of structural details, as it mimics human visual perception [4].
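A minimal NumPy sketch of SSIM is given below. It computes the standard SSIM expression once over the whole image, whereas practical implementations usually average it over local windows. The function name `global_ssim` and the constants `k1=0.01`, `k2=0.03` (commonly used defaults, with C1 = (k1·L)² and C2 = (k2·L)² for data range L) are assumptions of this sketch.

```python
import numpy as np

def global_ssim(a, b, data_range=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM computed over the full images a and b."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    c1 = (k1 * data_range) ** 2           # stabilizes the luminance term
    c2 = (k2 * data_range) ** 2           # stabilizes the contrast/structure term
    mu_a, mu_b = a.mean(), b.mean()       # means
    var_a, var_b = a.var(), b.var()       # variances
    cov_ab = ((a - mu_a) * (b - mu_b)).mean()  # covariance
    numerator = (2 * mu_a * mu_b + c1) * (2 * cov_ab + c2)
    denominator = (mu_a**2 + mu_b**2 + c1) * (var_a + var_b + c2)
    return float(numerator / denominator)

# Toy example: an image compared with itself and with its inverted copy.
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(16, 16)).astype(np.float64)
same = global_ssim(image, image)
inverted = global_ssim(image, 255.0 - image)
```

An image compared with itself scores 1, while the inverted copy scores much lower because its structure is negatively correlated with the original.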

- PSNR (Peak Signal-to-Noise Ratio)

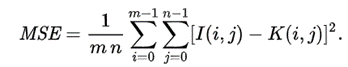

PSNR is a commonly used metric to measure the quality of an image reconstruction or restoration compared to the original image. It quantifies the difference between the original image and the reconstructed image in terms of peak signal power and noise power. PSNR is expressed in decibels (dB), and a higher PSNR value indicates better image quality [5].

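Here, $MAX_I$ is the maximum possible pixel value of the image (for example, 255 for 8-bit images). Given a noise-free m×n monochrome image I and its noisy approximation K, the MSE is defined as

$$\mathrm{MSE} = \frac{1}{mn} \sum_{i=0}^{m-1} \sum_{j=0}^{n-1} \left[ I(i, j) - K(i, j) \right]^2$$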
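The mean squared error and the PSNR derived from it can be sketched in NumPy as follows. The sketch assumes the usual definition PSNR = 10·log10(MAX_I² / MSE) and 8-bit images (`max_i=255`); the function names and the toy images are chosen for illustration.

```python
import numpy as np

def mse(i, k):
    """Mean squared error between a reference image i and its approximation k."""
    i = np.asarray(i, dtype=np.float64)   # cast first to avoid uint8 overflow
    k = np.asarray(k, dtype=np.float64)
    return float(np.mean((i - k) ** 2))

def psnr(i, k, max_i=255.0):
    """Peak signal-to-noise ratio in decibels; infinite for identical images."""
    err = mse(i, k)
    if err == 0:
        return float("inf")
    return float(10.0 * np.log10(max_i**2 / err))

# Toy example: every pixel of the approximation is off by 5 grey levels.
reference = np.zeros((4, 4), dtype=np.uint8)
noisy = reference + 5
error = mse(reference, noisy)    # 25.0
quality_db = psnr(reference, noisy)
```

Because PSNR depends only on pixel-wise error, it is usually reported together with SSIM rather than instead of it.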

In conclusion, SRGAN is a powerful tool for image super-resolution, capable of enhancing the quality and detail of low-resolution images using a generative adversarial network. It improves image resolution while producing realistic, detailed outputs. Metrics such as Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM) are essential for assessing the quality of super-resolved images. Evaluating SRGAN outputs with these metrics shows how well structural details are maintained, which is crucial for applications in scientific imaging and other fields.

References

1. Christian Ledig, Lucas Theis, Ferenc Huszár, Jose Caballero, Andrew Cunningham, Alejandro Acosta, Andrew Aitken, Alykhan Tejani, Johannes Totz, Zehan Wang, Wenzhe Shi (Twitter). Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network.

2. Xintao Wang, Ke Yu, Shixiang Wu, Jinjin Gu, Yihao Liu, Chao Dong, Chen Change Loy, Yu Qiao, Xiaoou Tang. ESRGAN: Enhanced Super-Resolution Generative Adversarial Networks.

3. Jingyun Liang, Jiezhang Cao, Guolei Sun, Kai Zhang, Luc Van Gool, Radu Timofte (ETH Zurich). SwinIR: Image Restoration Using Swin Transformer.

4. Jim Nilsson, Tomas Akenine-Möller (NVIDIA). Understanding SSIM.

Authors

Dr. Uthayasanker Thayasivam

Senior Lecturer, Department of Computer Science and Engineering

University of Moratuwa

Ms. H.K.G.V.L. Wickramarathna

Senior Lecturer, Department of Computer Science and Engineering

University of Moratuwa